In C++ programming, optimization goes beyond just tweaking code for performance gains. It fundamentally revolves around making smart design choices. Here’s why:

- Algorithm Selection: The choice of algorithm can drastically affect performance. Using an O(n log n) sort algorithm over an O(n^2) one is a prime example.

- Data Structures: Choosing the right data structure (e.g., using a hash table instead of a linked list for fast lookups) can lead to significant efficiency improvements.

- Memory Management: Efficient memory usage and minimizing allocations/deallocations can enhance performance. Techniques like memory pooling or using smart pointers properly can make a big difference.

- Concurrency and Parallelism: Designing systems that effectively use multiple threads or processes can improve performance. C++11 introduced standard support for threading, which aids in this.

- Avoiding Premature Optimization: Focusing on clean, maintainable code first and optimizing critical sections later is usually more effective.

Doxygen Case Study: Fighting memory thrashing Through Design Choices

When the processes running on your machine attempt to allocate more memory than your system has available, the kernel begins to swap memory pages to and from the disk. This is done in order to free up sufficient physical memory to meet the RAM allocation requirements of the requestor.

Excessive use of swapping is called thrashing and is undesirable because it lowers overall system performance, mainly because hard drives are far slower than RAM.

If your application needs to use a large amount of data, you will be exposed to thrashing and your application could slow dramatically. Two solutions exist: either optimize your applications to use memory more efficiently or add more physical RAM to the system.

Let’s discover which solution Doxygen uses, to optimize its memory usage and avoid the thrashing problem.

Doxygen is the de facto standard tool for generating documentation from annotated C++ sources, but it also supports other popular programming languages such as C, Objective-C, C#, PHP, Java, Python, and many others. Thanks to Dimitri van Heesch for his great effort to develop and maintain the project.

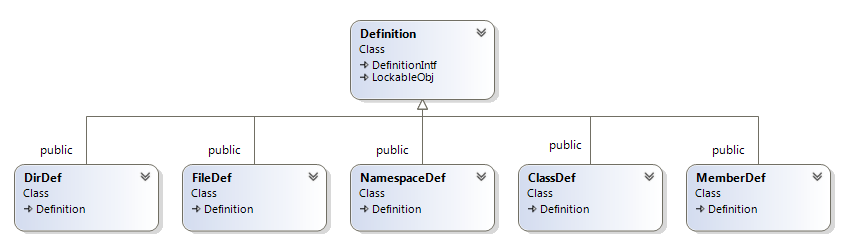

Doxygen takes as an entry the source files, parse them to extract the needed data and store the result in class instances of kind DirDef, FileDef, NamespaceDef, ClassDef, and MemberDef. All inherit from the Definition class .

The instances of these classes will be used after to generate the documentation. The data consuming more memory are these concerning the information about methods and variables which are represented by the MemberDef class, the size of these instances could growth to exceeds more than 1 Go, it depends on the number of methods and variables of the projects treated.

For some projects store, all these instances in memory will impact the system performance, and the generation of the documentation could take many hours.

How Doxygen optimize the memory?

Doxygen uses a solution based on the disk cache, using a disk cache is a popular way to optimize your memory usage. The idea is to store in a file the data needed to be in memory; this cache will contain many slots, each one containing a specific piece of data, and some slots will be released if the cache size exceeds a certain value. The data released will be present on disk, and if we need them again they will be moved to memory.

In case of Doxygen the algorithm is very simple:

- Defines a cache with 65535 slots.

- When a MemberDef instance needs to be created, Doxygen checks if a cache slot is available, if it’s the case the instance will be created in memory, else it’s stored in a data file in the disk, and an index file is updated to store where in the data file this data is stored.

- If Doxygen needs to access a MemberDef instance, it checks its presence in the cache. If it’s not present, Doxygen gets from the index file where its data are stored, seek to this position in the data file and loads it from the Disk.

The performance of the cache depends on:

- The container: It could be a queue, an array, a list or maybe a custom container. The choice of one of these containers could impact your cache performance.

- The max size of the cache.

- The algorithm used to free entries from the cache. When the cache reaches its maximum, you have to decide which entries to release, for example, you could:

– Release the first slots loaded.

– Release the latest slots loaded.

– Release the less-used slots.

1- The Container

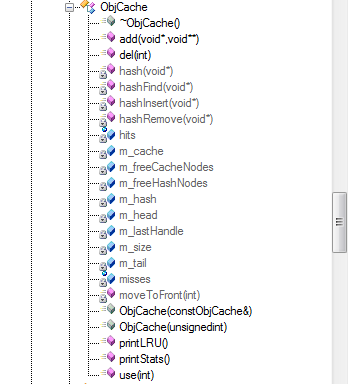

Doxygen defines the ObjCache class which is a linked list of CacheNode class, this class is responsible to add and remove instances from the cache.

Here’s how Doxygen declare its cache:

Doxygen::symbolCache =new ObjCache(16+cacheSize);// 16 -> room for 65536 elements,

2 – Cache size

Doxygen gets the cache max size from the configuration file:

int cacheSize =Config_getInt("SYMBOL_CACHE_SIZE");

It’s a good idea to let this parameter be configurable, so you can increase the cache if you have a machine with a big physical memory size to increase the cache performance. However, for the newer Doxygen releases, this parameter is removed from the configuration file, and a default value is used.

3-The algorithm to release entries from the cache

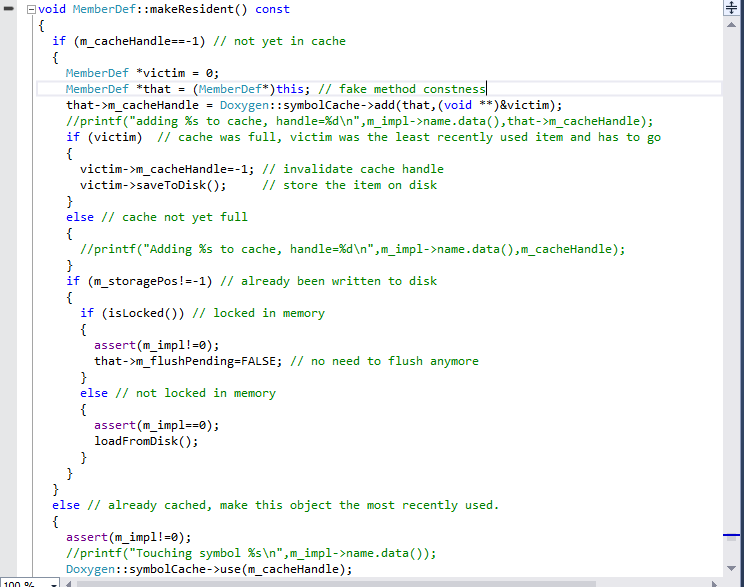

Here’s the code snippet from Doxygen code source responsible for releasing the cache when it reaches its maximum:

As specified in the makeResident method code, which is very well commented, the least recently used item is removed if the cache is full.

This method is invoked for almost all MemberDef methods, it’s called each time you have to access the MemberDef state to check if this member is loaded or not. Load it if it’s not the case and remove the least recently used member from the cache.

The impact of using the cache

Using the cache could increase the performance of your application, but did the cache bring a big optimization or just a micro optimization and it’s not worth to add it in your application?

Before using Clang as C/C++ parser for our product, we used Doxygen as a parser for our first version, we did many tests on cache size, when we disabled the cache and parse some C++ projects with this modified version, the parsing time has much increased, sometimes from 5 min to 25 min. For big projects it takes hours and it impacts a lot the system performance.

Conclusion

Effective C++ optimization is deeply rooted in making informed design choices. By selecting the right algorithms, data structures, and memory management techniques, and by leveraging concurrency where appropriate, developers can achieve significant performance improvements. This strategic approach to design is what truly drives optimization in C++.